Last month I wrote about my Twitter package for Mathematica. Shortly thereafter I wrote a similar post for my company's blog. That post seems to have been well received and has generated quite a bit of interest on Twitter.

Search

I have continued to add useful features to the package, including the ability to search Twitter.

<<Twitter`

Column[Take[TwitterSearch["twitter mathematica"], 5], Dividers->All]

| TwitterStatus[<netzturbine: ‚ô∫ @imabug cool, mathematica + the twitter API http://bit.ly/1Wv0iV - should work w/ !laconica 2>] |

| TwitterStatus[<imabug: cool, mathematica and the twitter API http://bit.ly/1Wv0iV>] |

| TwitterStatus[<kdrewien: RT @PragueBob Wolframs mainstream Mathematica software is plugging into Twitter: http://cli.gs/257htM Geeky!>] |

| TwitterStatus[<lunajade: How to Twitter with Mathematica and analyze the data... http://bit.ly/14WA8F (via @WolframResearch) [VERY interesting...]>] |

| TwitterStatus[<pythonism: http://twitter.com/MikeCr/statuses/1835493378 "@ruby_gem Mathematica, firefox, python">] |

Adding this functionality was actually a little more difficult than it should have been because the Twitter search API returns a different flavor of XML (ATOM) than the regular Twitter API.

Also, I renamed the HTTP.m package (which was used by Twitter.m) to WebUtils.m and I added some other useful functionality, including the ability to interact with a few popular URL shortening/expanding services. This has enabled some interesting possibilities.

Tweet cache

As you may already know, Wolfram|Alpha launched this past weekend. The website went live on Friday evening and the official launch was Monday afternoon. Sometime Friday afternoon I started running a short Mathematica program that used the TwitterSearch[] function to download all tweets mentioning Wolfram|Alpha and stuff them into an SQLite database. The program is still running, downloading new tweets as they happen.

db = Database`OpenDatabase["Twitter.sqlite"]

Database`Database[Twitter.sqlite]

Database`QueryDatabase[db, "CREATE TABLE tweets (id INTEGER PRIMARY KEY, text TEXT, source TEXT, created_at DATE, in_reply_to_status_id INTEGER, in_reply_to_user_id INTEGER, in_reply_to_screen_name TEXT, user_id INTEGER, user_screen_name TEXT, user_name TEXT, user_profile_image_url TEXT);"];

TwitterStatusDateDBString[status_TwitterStatus] :=

DateString[TwitterStatusDate[status], {

"Year", "-", "Month", "-", "Day", " ",

"Hour", ":", "Minute", ":", "Second"

}];

DateString[TwitterStatusDate[status], {

"Year", "-", "Month", "-", "Day", " ",

"Hour", ":", "Minute", ":", "Second"

}];

InsertTweet[db_Database`Database, status_TwitterStatus]:=Module[{query, user, vals}, query = "INSERT INTO tweets (id, text, source, created_at, in_reply_to_status_id, in_reply_to_user_id, user_screen_name, user_name, user_profile_image_url) values (?, ?, ?, ?, ?, ?, ?, ?, ?)";

user = TwitterStatusUser[status];

vals = {

TwitterStatusID[status],

TwitterStatusText[status],

TwitterStatusSource[status],

TwitterStatusDateDBString[status],

TwitterStatusReplyID[status],

TwitterStatusReplyUserID[status],

TwitterUserScreenName[user],

TwitterUserName[user],

TwitterUserProfileImageURL[user]

};

Database`QueryDatabase[db, query, vals]

];

user = TwitterStatusUser[status];

vals = {

TwitterStatusID[status],

TwitterStatusText[status],

TwitterStatusSource[status],

TwitterStatusDateDBString[status],

TwitterStatusReplyID[status],

TwitterStatusReplyUserID[status],

TwitterUserScreenName[user],

TwitterUserName[user],

TwitterUserProfileImageURL[user]

};

Database`QueryDatabase[db, query, vals]

];

query = "wolframalpha OR wolfram_alpha OR \"wolfram alpha\"";

TwitterSearchSince[query_String, id_Integer]:=Module[{tweets = {}, lastCount = - 1, page = 1},

While[Length[tweets] =!= lastCount,

lastCount = Length[tweets];

tweets = Join[tweets,

TwitterSearch[query, "Results"->100, "Since"->id, "Page"->page++]

]

];

tweets

];

While[Length[tweets] =!= lastCount,

lastCount = Length[tweets];

tweets = Join[tweets,

TwitterSearch[query, "Results"->100, "Since"->id, "Page"->page++]

]

];

tweets

];

since = TwitterStatusID[First[TwitterSearch[query]]];

UpdateCache[]:=While[True,

tweets = TwitterSearchSince[query, since];

Monitor[

Do[InsertTweet[db, tweets[[i]]], {i, Length[tweets]}], ProgressIndicator[Dynamic[i/Length[tweets]]]

];

since = If[Length[tweets]>0, TwitterStatusID[First[tweets]], since];

Print["added ", ToString[Length[tweets]], " tweets to database at ", DateString[]];

Pause[30]

];

tweets = TwitterSearchSince[query, since];

Monitor[

Do[InsertTweet[db, tweets[[i]]], {i, Length[tweets]}], ProgressIndicator[Dynamic[i/Length[tweets]]]

];

since = If[Length[tweets]>0, TwitterStatusID[First[tweets]], since];

Print["added ", ToString[Length[tweets]], " tweets to database at ", DateString[]];

Pause[30]

];

UpdateCache[]

I chose SQLite because it's easy to use, it's included with Mathematica (though possibly undocumented), it can be accessed easily via a command line tool, and it can be safely accessed by multiple processes at the same time. I started the program five days ago and it's still running. I am able to query the database from a different Mathematica process without interrupting the tweet downloads.

Tweet rate

So, from the other instance of Mathematica I am able to do things like this.

db = Database`OpenDatabase["Twitter.sqlite"]

Database`Database[Twitter.sqlite]

Length[ids = Database`QueryDatabase[db, "select id from tweets"]]

62659

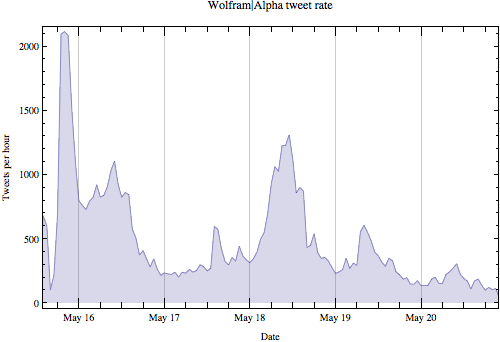

62,659 tweets mentioning Wolfram|Alpha between Friday and Wednesday of launch week. Let's take a look at the timeline. First, grab the creation date of each tweet in the database.

dateStrs = Database`QueryDatabase[db, "select created_at from tweets"];

Convert the strings into Mathematica DateList[] notation.

dates = Monitor[Table[DateList[dateStrs[[i, 1]]], {i, Length[dateStrs]}], ProgressIndicator[Dynamic[i / Length[dateStrs]]]];

Tally the number of tweets per hour.

tally = Tally[{#[[1]], #[[2]], #[[3]], #[[4]], 0, 0}&/@dates];

DateListPlot[tally, Joined->True, FrameLabel->{"Date", "Tweets per hour"}, PlotRange->{{First[dates], DatePlus[DateList[], { - 1, "Hour"}]}, Automatic}, PlotLabel->"Wolfram|Alpha tweet rate", Filling->Axis, ImageSize->{500, Automatic}]

There are large spikes in tweets per hour around the time the website went live on Friday evening, and again when the site officially launched on Monday.

Tweet links

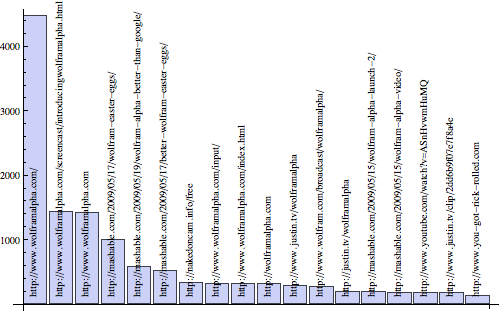

Since many people post URLs in their tweets it might be interesting to take a look at these to see which web pages and blogs about Wolfram|Alpha are generating the most interest.

tweets = Database`QueryDatabase[db, "select text from tweets"][[All, 1]];

There is a wide variation in the way people post URLs to Twitter, so unfortunately I couldn't find a single regular expression that would find every single one of them. This one works reasonably well.

Length[urls = Flatten[StringCases[#, "http://"~~Except[">"|"]"|"\""|"'"|","|WhitespaceCharacter]..]&/@tweets]]

37565

Length[tally = Tally[urls]]

17969

So there appear to be 37,565 links posted, 17,969 of which are unique. The thing about these URLs is that many use URL shortening services. So it's quite possible many shortened URLs point to the same destination URL. No matter. We can use the URLExpand[] function in my WebUtils package to expand URLs from many of the common URL shortening services.

Unfortunately, that much network traffic takes a long time. So let's cache the results as a list of rules so we can avoid future lookups of the same short URL if possible.

urlMap = {};

expandURL[url_String] := Module[

{newurl},

newurl = url /. urlMap;

If[newurl === url,

newurl = URLExpand[url];

If[newurl =!= url, AppendTo[urlMap, url->newurl]];

];

newurl

];

{newurl},

newurl = url /. urlMap;

If[newurl === url,

newurl = URLExpand[url];

If[newurl =!= url, AppendTo[urlMap, url->newurl]];

];

newurl

];

This expansion takes quite some time.

expanded = Monitor[Table[expandURL[urls[[i]]], {i, Length[urls]}], ProgressIndicator[Dynamic[i / Length[urls]]]];

Length[tally = Reverse@SortBy[Tally[expanded], Part[#, 2]&]]

14609

Let's take a look at all of the expanded URLs which were posted more than 100 times.

BarChart[Labeled[Hyperlink[#[[2]], #[[1]]], Rotate[#[[1]], Pi / 2], {Bottom}]&/@Cases[tally, {url_String, n_Integer}/;n>100], ImageSize->{500, Automatic}]

Grid[{#[[2]], Hyperlink[#[[1]]]}&/@Take[tally, 30], Dividers->All, Alignment->{{Right, Left}}]

So we have a whole bunch of links directly to the Wolfram|Alpha website, a bunch links to the screencast, a lot of links to some Easter eggs, a porn site (hmmm...), the justin.tv broadcast, Rick-Roll URLs, blog posts, etc. Interesting stuff.

Downloads

Download WebUtils.m (required by Twitter.m).

2 comments:

Hi

I have problem.Sqlite works ok about 2mnths after this standard code return:Database`Database::notobj: db is not a valid Database object. -strange coz in kernel window comand code works ok for select.Any idea whats is wrong?Math 8.0.4.0 W7 64.

No, I don't. Sorry!

Post a Comment